Hackathoning my way to publishing an NPM package…

Postman – The Bearer of good news…

Postman and Lodash – The perfect partnership

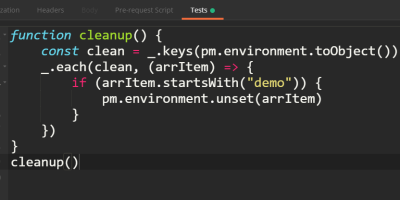

Dynamically unset Postman Environment Variables

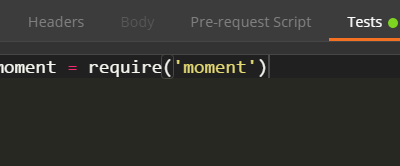

Hold on, wait a moment…

Mission Accomplished

Hi there – I’m here to help…

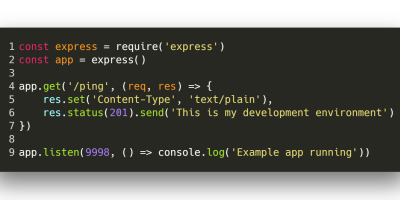

Just fiddling around…